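Face Recognition (Face Detection) with react-native-vision-camera

This article describes an approach to face detection with react-native-vision-camera, using the react-native-vision-camera-face-detector plugin together with MLKit Vision. The key steps are installing the dependencies, configuring the Babel plugin, and working around an error raised for an anonymous function inside a worklet; a complete code implementation is provided. The article explains how to detect faces through a frame processor, how to validate conditions such as angle and position, and includes photo capture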


Official site: VisionCamera Documentation | VisionCamera

The face detection feature we want to build relies mainly on the frame processor in react-native-vision-camera, for example:

    const frameProcessor = useFrameProcessor((frame) => {
      'worklet'
      // do something
    }, [])

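First, install the required dependencies.

1. npx expo install react-native-vision-camera

2. npm i react-native-worklets-core

This is needed because frame processors use a worklets worker thread.

Don't forget to add the plugin to babel.config.js: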

    module.exports = {
      plugins: [
        ['react-native-worklets-core/plugin'],
      ],
    }

3. npm i react-native-vision-camera-face-detector

A VisionCamera V4 frame processor plugin that detects faces using the MLKit Vision face detector.

The GitHub repository of react-native-vision-camera-face-detector provides a face detection example. When I copied it as-is on 2025-07-25, it failed with the following error:

Frame Processor Error: Regular javascript function '' cannot be shared. Try decorating the function with the 'worklet' keyword to allow the javascript function to be used as a worklet., js engine: VisionCamera

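The error says the function cannot be shared and must be marked with the 'worklet' directive, even though we have clearly already added it. (The real problem is that the anonymous function is not recognized as a worklet.)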

    // Example code from GitHub
    const frameProcessor = useFrameProcessor((frame) => {
      'worklet'
      runAsync(frame, () => {
        'worklet' // the anonymous function is marked as a worklet here, but it is not recognized
        const faces = detectFaces(frame)
        // ... chain some asynchronous frame processor
        // ... do something asynchronously with frame
        handleDetectedFaces(faces)
      })
      // ... chain frame processors
      // ... do something with frame
    }, [handleDetectedFaces])

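Some tutorials suggest using runOnJS from react-native-reanimated instead. For me this crashed the app at runtime, probably because the two different threads interfere with each other.

The correct approach 👇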

    const [faces, setFaces] = useState<Face[]>([]);
    const { detectFaces } = useFaceDetector();
    const handleFacesJS = Worklets.createRunOnJS(setFaces);

    const frameProcessor = useFrameProcessor(
      (frame) => {
        "worklet";
        const faces = detectFaces(frame);
        handleFacesJS(faces);
      },
      [detectFaces]
    );

With this, faces are detected correctly and the results are stored in faces.
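One caveat before relying on every field of each detected face: as far as I can tell from the plugin's README, `useFaceDetector` accepts an options object, and `classificationMode` defaults to `'none'`, in which case the eye-open probabilities are not populated. The sketch below mirrors a few option names in a local type so it can run on its own. `DetectorOptionsSketch` and `detectorOptions` are hypothetical names, and the option names should be checked against the plugin version you have installed.

```typescript
// Hypothetical local mirror of a few plugin options. The real type is exported
// by react-native-vision-camera-face-detector and may differ between versions.
interface DetectorOptionsSketch {
  performanceMode?: "fast" | "accurate";
  classificationMode?: "none" | "all";
  landmarkMode?: "none" | "all";
  contourMode?: "none" | "all";
}

// Assumption: eye-open probabilities are only reported when classification
// is enabled, so a check on open eyes needs classificationMode "all".
const detectorOptions: DetectorOptionsSketch = {
  performanceMode: "fast",
  classificationMode: "all",
};

// Inside the component (not runnable outside React Native):
// const { detectFaces } = useFaceDetector(detectorOptions);
```

Defining the options object once at module scope keeps its identity stable across renders, which avoids recreating the detector on every render.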

Next comes the usual setup for the camera, the device, and so on; see the code for details.

Complete example (styled with NativeWind):

    import React, { useEffect, useRef, useState } from "react";
    import { Image, Pressable, Text, View } from "react-native";
    import type { PhotoFile } from "react-native-vision-camera";
    import {
      Camera,
      useCameraDevice,
      useFrameProcessor,
    } from "react-native-vision-camera";
    import {
      Face,
      useFaceDetector,
    } from "react-native-vision-camera-face-detector";
    import { Worklets } from "react-native-worklets-core";

    export default function App() {
      const device = useCameraDevice("front");
      const camera = useRef<Camera>(null);
      const [faces, setFaces] = useState<Face[]>([]);
      const [capturedPhoto, setCapturedPhoto] = useState<PhotoFile | null>(null);
      // Camera permission state
      const [hasPermission, setHasPermission] = useState(false);
      // Hint message shown to the user
      const [faceTip, setFaceTip] = useState<string>("");

      const { detectFaces } = useFaceDetector();
      // Wrap the state setter so the worklet can call it on the JS thread
      const handleFacesJS = Worklets.createRunOnJS(setFaces);

      const frameProcessor = useFrameProcessor(
        (frame) => {
          "worklet";
          const faces = detectFaces(frame);
          handleFacesJS(faces);
        },
        [detectFaces]
      );

      const captureAndStop = async () => {
        if (capturedPhoto) return;
        try {
          const photo = await camera.current?.takeSnapshot({
            quality: 85,
          });
          if (photo) {
            setCapturedPhoto(photo);
            // The photo can now be uploaded to a backend, for example:
            // const formData = new FormData();
            // formData.append("file", {
            //   uri: "file://" + photo.path,
            //   name: nanoid(10) + ".jpg",
            //   type: "image/jpeg",
            // } as any);
          }
        } catch (e) {
          // Log the failure instead of silently swallowing it
          console.warn("Failed to take snapshot:", e);
        }
      };

      useEffect(() => {
        if (capturedPhoto) {
          setFaceTip("Face verified");
          return;
        }
        if (faces.length === 0) {
          setFaceTip("No face detected");
          return;
        }
        if (faces.length > 1) {
          setFaceTip("Make sure you are the only person in the frame");
          return;
        }

        const face = faces[0];

        // Temporary debug output
        console.log("Face bounds:", face.bounds);
        console.log("Face angles:", {
          yaw: face.yawAngle,
          roll: face.rollAngle,
          pitch: face.pitchAngle,
        });

        // Position check temporarily commented out; testing the other conditions first
        // const faceX = face.bounds.x;
        // const faceY = face.bounds.y;
        // const faceWidth = face.bounds.width;
        // const faceHeight = face.bounds.height;

        // Check that the face size is appropriate
        const faceWidth = face.bounds.width;
        const faceHeight = face.bounds.height;
        const faceArea = faceWidth * faceHeight;

        // Use the face area as a proxy for distance (this threshold may need tuning)
        if (faceArea < 5000) {
          // This value may need tuning for your setup
          setFaceTip("Please move closer to the camera");
          return;
        }

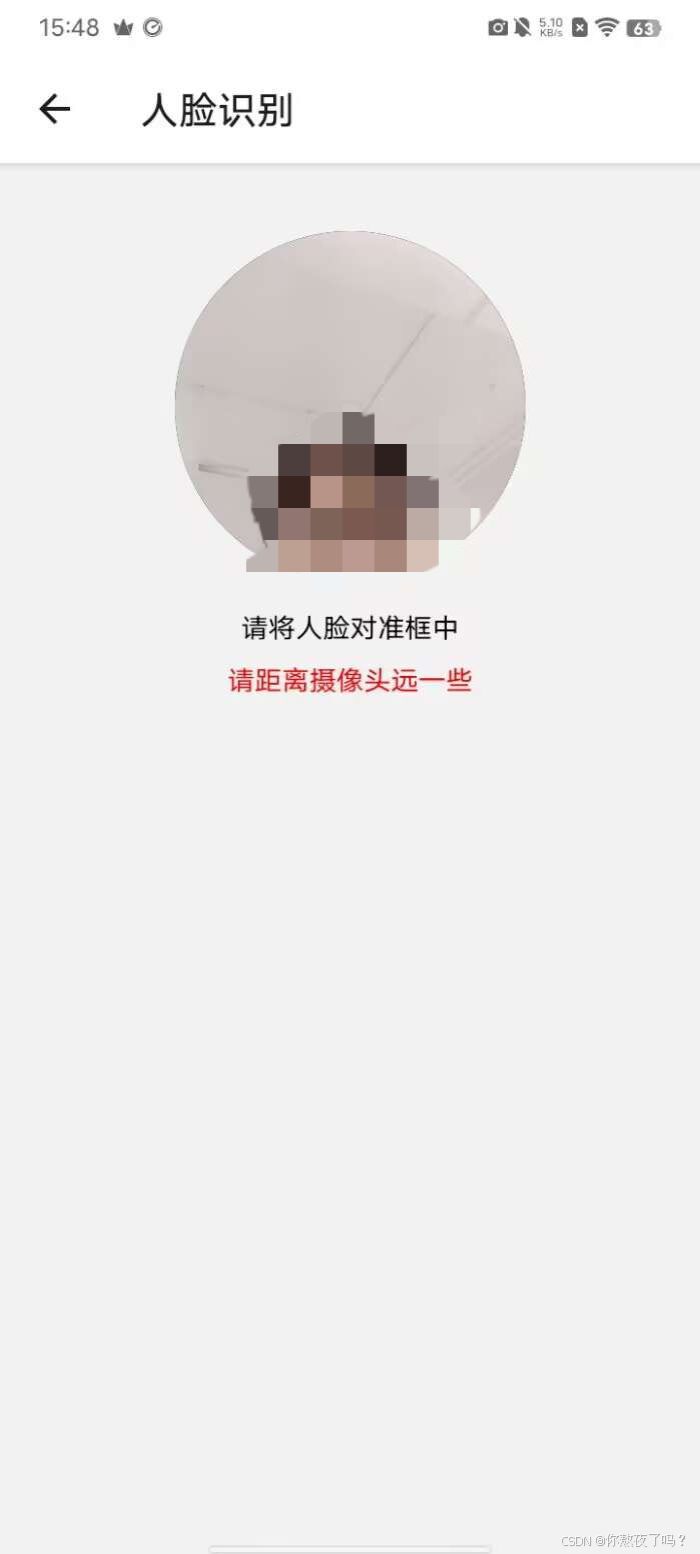

        if (faceArea > 50000) {
          // This value may need tuning for your setup
          setFaceTip("Please move farther from the camera");
          return;
        }

        // Check the face angles
        const yawAngle = Math.abs(face.yawAngle ?? 0);
        const rollAngle = Math.abs(face.rollAngle ?? 0);
        const pitchAngle = Math.abs(face.pitchAngle ?? 0);

        if (yawAngle > 15) {
          setFaceTip("Face the camera directly; do not turn your head left or right");
          return;
        }
        if (rollAngle > 15) {
          setFaceTip("Keep your head level; do not tilt it");
          return;
        }
        if (pitchAngle > 15) {
          setFaceTip("Keep your head level; do not nod up or down");
          return;
        }

        // Check that both eyes are open
        if (
          face.leftEyeOpenProbability !== undefined &&
          face.rightEyeOpenProbability !== undefined
        ) {
          const leftEyeOpen = face.leftEyeOpenProbability > 0.7;
          const rightEyeOpen = face.rightEyeOpenProbability > 0.7;
          if (!leftEyeOpen || !rightEyeOpen) {
            setFaceTip("Please open both eyes");
            return;
          }
        }

        // All conditions are met: clear the hint and take the photo
        setFaceTip("");
        captureAndStop();
      }, [faces, capturedPhoto]);

      useEffect(() => {
        (async () => {
          const status = await Camera.requestCameraPermission();
          setHasPermission(status === "granted");
        })();
      }, []);

      const handleRetake = () => {
        setCapturedPhoto(null);
        setFaces([]);
      };

      if (!hasPermission) {
        return <Text>Requesting camera permission...</Text>;
      }
      if (device == null) {
        return <Text>No camera detected</Text>;
      }

      if (capturedPhoto) {
        return (
          <View className="flex-1 py-10">
            <View className="flex-row justify-center">
              <View
                style={{ width: 180, height: 180 }}
                className="overflow-hidden rounded-full"
              >
                <Image
                  source={{ uri: "file://" + capturedPhoto.path }}
                  style={{ width: 180, height: 180 }}
                />
              </View>
            </View>
            <View className="w-full px-6">
              <Pressable
                className="w-full items-center mt-4 bg-blue-500 py-4 rounded-md"
                onPress={handleRetake}
              >
                <Text className="text-white">Retry</Text>
              </Pressable>
            </View>
          </View>
        );
      }

      return (
        <View className="flex-1 py-10">
          <View className="flex-row justify-center">
            <View
              style={{ width: 180, height: 180 }}
              className="overflow-hidden rounded-full"
            >
              <Camera
                key={capturedPhoto ? "captured" : "live"}
                ref={camera}
                style={{ width: 180, height: 180 }}
                device={device}
                isActive={!capturedPhoto}
                frameProcessor={!capturedPhoto ? frameProcessor : undefined}
                pixelFormat="yuv"
              />
            </View>
          </View>
          <View className="items-center mt-4">
            <Text>Position your face inside the frame</Text>
            {faceTip ? (
              <Text style={{ color: "red", marginTop: 8 }}>{faceTip}</Text>
            ) : null}
          </View>
        </View>
      );
    }
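The validation chain inside the effect can also be written as a pure function, which makes the thresholds easier to tune and test without a camera. The following is a minimal sketch, not the author's code: `FaceLike`, `getFaceTip`, and the constant names are hypothetical, the thresholds are copied from the example above, and the English hint strings are illustrative.

```typescript
// Minimal stand-in for the plugin's Face type (only the fields used here).
interface FaceLike {
  bounds: { x: number; y: number; width: number; height: number };
  yawAngle?: number;
  rollAngle?: number;
  pitchAngle?: number;
  leftEyeOpenProbability?: number;
  rightEyeOpenProbability?: number;
}

// Thresholds taken from the example; they likely need tuning per device.
const MIN_FACE_AREA = 5000;
const MAX_FACE_AREA = 50000;
const MAX_ANGLE_DEG = 15;
const MIN_EYE_OPEN = 0.7;

// Returns a hint for the user, or "" when every check passes.
function getFaceTip(faces: FaceLike[]): string {
  if (faces.length > 1) return "Make sure you are the only person in the frame";
  const face = faces[0];
  if (face === undefined) return "No face detected";

  const area = face.bounds.width * face.bounds.height;
  if (area < MIN_FACE_AREA) return "Please move closer to the camera";
  if (area > MAX_FACE_AREA) return "Please move farther from the camera";

  if (Math.abs(face.yawAngle ?? 0) > MAX_ANGLE_DEG) {
    return "Face the camera directly; do not turn your head left or right";
  }
  if (Math.abs(face.rollAngle ?? 0) > MAX_ANGLE_DEG) {
    return "Keep your head level; do not tilt it";
  }
  if (Math.abs(face.pitchAngle ?? 0) > MAX_ANGLE_DEG) {
    return "Keep your head level; do not nod up or down";
  }

  // The eye check only runs when the detector reports both probabilities.
  const left = face.leftEyeOpenProbability;
  const right = face.rightEyeOpenProbability;
  if (left !== undefined && right !== undefined) {
    if (left <= MIN_EYE_OPEN || right <= MIN_EYE_OPEN) return "Please open both eyes";
  }
  return "";
}
```

In the component, the effect would then reduce to computing `getFaceTip(faces)`, passing the result to `setFaceTip`, and calling `captureAndStop()` when the result is an empty string.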

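When a detected face meets all the conditions, captureAndStop is called to take a photo. The temporary image is stored in capturedPhoto, and any further business logic can follow from there.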
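Because the effect runs on every update of the detected faces and the snapshot is asynchronous, captureAndStop may be invoked more than once before capturedPhoto is set. One possible refinement, sketched here under that assumption, is a small gate that fires only after several consecutive valid results and only once until it is reset. `createCaptureGate` is a hypothetical helper, not part of any library used in this article.

```typescript
// Hypothetical helper: signals "capture now" only after `required` consecutive
// valid results, and only once until reset() is called.
function createCaptureGate(required: number) {
  let streak = 0;
  let fired = false;
  return {
    // Feed one validation result per face update; returns true exactly once.
    update(isValid: boolean): boolean {
      if (fired) return false;
      streak = isValid ? streak + 1 : 0;
      if (streak >= required) {
        fired = true;
        return true;
      }
      return false;
    },
    // Call this when the user asks to retake the photo.
    reset(): void {
      streak = 0;
      fired = false;
    },
  };
}
```

In a component the gate could be kept in a `useRef` so it survives re-renders, with `update(...)` called from the effect and `reset()` called from the retake handler.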
