[Deep Learning Notes] A Digest of the Official PyTorch Documentation

Notes on the official PyTorch tutorials


Official tutorial: https://pytorch.org/tutorials/beginner/basics/quickstart_tutorial.html

2022.6.21

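I. Quickstart

1. Working with data

PyTorch has two primitives for working with data: torch.utils.data.Dataset and torch.utils.data.DataLoader. A Dataset stores the samples and their corresponding labels; a DataLoader, as the name suggests, loads the data from a Dataset by wrapping an iterable around it.

Every TorchVision dataset takes two arguments, transform and target_transform, which modify the samples and the labels, respectively.

PyTorch offers the domain-specific libraries TorchText, TorchVision, and TorchAudio, all of which include datasets.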

import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision.transforms import ToTensor

# Download training data from open datasets.
training_data = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor(),
)

# Download test data from open datasets.
test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor(),
)

batch_size = 64

# Create data loaders.
train_dataloader = DataLoader(training_data, batch_size=batch_size)
test_dataloader = DataLoader(test_data, batch_size=batch_size)

for X, y in test_dataloader:
    print(f"Shape of X [N, C, H, W]: {X.shape}")
    print(f"Shape of y: {y.shape} {y.dtype}")
    break

2. Creating models

# Get cpu or gpu device for training.
device = "cuda" if torch.cuda.is_available() else "cpu"
print(f"Using {device} device")

# Define model
class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10)
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits

model = NeuralNetwork().to(device)
print(model)

3. Optimizing the model parameters

# First define the loss function and the optimizer
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=1e-3)

## Training
def train(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)
    model.train()
    for batch, (X, y) in enumerate(dataloader):
        X, y = X.to(device), y.to(device)

        # Compute prediction error
        pred = model(X)
        loss = loss_fn(pred, y)

        # Backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if batch % 100 == 0:
            loss, current = loss.item(), batch * len(X)
            print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

## Testing
def test(dataloader, model, loss_fn):
    size = len(dataloader.dataset)
    num_batches = len(dataloader)
    model.eval()
    test_loss, correct = 0, 0
    with torch.no_grad():
        for X, y in dataloader:
            X, y = X.to(device), y.to(device)
            pred = model(X)
            test_loss += loss_fn(pred, y).item()
            correct += (pred.argmax(1) == y).type(torch.float).sum().item()
    test_loss /= num_batches
    correct /= size
    print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

## Print the accuracy and loss after each epoch
epochs = 5
for t in range(epochs):
    print(f"Epoch {t+1}\n-------------------------------")
    train(train_dataloader, model, loss_fn, optimizer)
    test(test_dataloader, model, loss_fn)
print("Done!")

4. Saving and loading models

## Save the model (its state_dict of learned parameters)
torch.save(model.state_dict(), "model.pth")
print("Saved PyTorch Model State to model.pth")

## Load the model: re-create the architecture, then load the saved parameters into it
model = NeuralNetwork()
model.load_state_dict(torch.load("model.pth"))

## Use the model for prediction
classes = [
    "T-shirt/top",
    "Trouser",
    "Pullover",
    "Dress",
    "Coat",
    "Sandal",
    "Shirt",
    "Sneaker",
    "Bag",
    "Ankle boot",
]

model.eval()
x, y = test_data[0][0], test_data[0][1]
with torch.no_grad():
    pred = model(x)
    predicted, actual = classes[pred[0].argmax(0)], classes[y]
    print(f'Predicted: "{predicted}", Actual: "{actual}"')
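The save/load round trip can be checked without touching the file system. A minimal sketch, assuming a small hypothetical stand-in model (`TinyNet`, not part of the tutorial) and an in-memory `io.BytesIO` buffer in place of `model.pth`:

```python
import io

import torch
from torch import nn


class TinyNet(nn.Module):
    """Hypothetical stand-in for NeuralNetwork, kept small for the demo."""

    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.fc = nn.Linear(28 * 28, 10)

    def forward(self, x):
        return self.fc(self.flatten(x))


torch.manual_seed(0)
trained = TinyNet()

# Save only the state_dict (parameter tensors keyed by name) into a buffer.
buffer = io.BytesIO()
torch.save(trained.state_dict(), buffer)
buffer.seek(0)

# Re-create the architecture first, then load the saved parameters into it.
restored = TinyNet()
restored.load_state_dict(torch.load(buffer))
restored.eval()

x = torch.rand(1, 28, 28)
with torch.no_grad():
    same_output = torch.equal(trained(x), restored(x))
print(same_output)  # True
```

Only the parameters are stored, which is why the class definition must be available when loading.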

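II. Tensors

1. Four ways to initialize a tensor

Tensors are similar to NumPy's ndarrays, except that tensors can run on GPUs and other hardware accelerators.

import torch
import numpy as np

### 1. Directly from data
data = [[1, 2],[3, 4]]
x_data = torch.tensor(data)

## 2. From a NumPy array
np_array = np.array(data)
x_np = torch.from_numpy(np_array)

## 3. From another tensor (a new tensor with the same shape)
x_ones = torch.ones_like(x_data) # retains the properties (shape, dtype) of x_data
x_rand = torch.rand_like(x_data, dtype=torch.float) # overrides the datatype of x_data

### 4. With random or constant values
shape = (2,3,)
rand_tensor = torch.rand(shape)
ones_tensor = torch.ones(shape)
zeros_tensor = torch.zeros(shape)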
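The `*_like` functions do not copy values: they reuse only the shape (and, unless overridden, the dtype) of their argument. A short sketch contrasting them with `Tensor.clone()`, which does copy the data:

```python
import torch

x_data = torch.tensor([[1, 2], [3, 4]])              # dtype inferred as torch.int64
x_ones = torch.ones_like(x_data)                     # same shape and dtype, every value is 1
x_rand = torch.rand_like(x_data, dtype=torch.float)  # same shape, dtype overridden to float32
x_copy = x_data.clone()                              # an actual copy of the values

print(x_data.dtype, x_ones.dtype, x_rand.dtype)  # torch.int64 torch.int64 torch.float32

# The clone lives in its own memory, so editing it leaves x_data unchanged.
x_copy[0, 0] = 100
print(x_data[0, 0].item(), x_copy[0, 0].item())  # 1 100
```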

2. Tensor attributes

tensor = torch.rand(3,4)

### Attributes describe a tensor's shape, datatype, and the device on which it is stored
print(f"Shape of tensor: {tensor.shape}")
print(f"Datatype of tensor: {tensor.dtype}")
print(f"Device tensor is stored on: {tensor.device}")

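3. Operations on tensors

By default, tensors are created on the CPU. We need to move them to the GPU explicitly with the .to method (after checking that a GPU is available).

# Move the tensor to the GPU if one is available
if torch.cuda.is_available():
    tensor = tensor.to("cuda")

## NumPy-like indexing and slicing (0-based): set every element of column 1 to 0
tensor[:,1] = 0

## Join tensors along a given dimension with torch.cat
t1 = torch.cat([tensor, tensor, tensor], dim=1)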
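`torch.cat` joins tensors along an existing dimension, while the related `torch.stack` joins them along a new one. A small sketch on the same 3x4 tensor, run on the CPU for simplicity:

```python
import torch

tensor = torch.rand(3, 4)
tensor[:, 1] = 0  # zero out the second column (0-based index 1)

t_cat = torch.cat([tensor, tensor, tensor], dim=1)      # joins along existing dim 1
t_stack = torch.stack([tensor, tensor, tensor], dim=0)  # adds a new leading dim

print(t_cat.shape)    # torch.Size([3, 12])
print(t_stack.shape)  # torch.Size([3, 3, 4])
```

In `t_cat`, the zeroed column reappears at indices 1, 5, and 9, once per copy.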

4. Bridge with NumPy

Tensors on the CPU and NumPy arrays can share their underlying memory locations, so changing one changes the other.
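The shared memory works in both directions. A minimal sketch using `Tensor.numpy()` and `torch.from_numpy()` together with in-place updates:

```python
import numpy as np
import torch

t = torch.ones(5)
n = t.numpy()   # NumPy view of the same CPU memory
t.add_(1)       # in-place change on the tensor ...
print(n)        # ... is visible in the array: [2. 2. 2. 2. 2.]

n2 = np.ones(5)
t2 = torch.from_numpy(n2)  # tensor sharing memory with n2
np.add(n2, 1, out=n2)      # in-place change on the array ...
print(t2)                  # ... is visible in the tensor (dtype float64, from NumPy)
```

The sharing only holds for in-place operations; an out-of-place expression such as `t = t + 1` allocates a new tensor and breaks the link.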

III. Datasets and DataLoaders

A dataset can be indexed like a list, e.g. training_data[index].

1. Creating a custom Dataset

A custom Dataset class must implement three functions: __init__, __len__, and __getitem__. Here the images are stored in the directory img_dir and their labels are stored separately in the CSV file annotations_file. The labels.csv file looks like:

tshirt1.jpg, 0
tshirt2.jpg, 0
...
ankleboot999.jpg, 9

import os
import pandas as pd
from torch.utils.data import Dataset
from torchvision.io import read_image

class CustomImageDataset(Dataset):
    def __init__(self, annotations_file, img_dir, transform=None, target_transform=None):
        self.img_labels = pd.read_csv(annotations_file, header=None) ## CSV file holding the labels (it has no header row)
        self.img_dir = img_dir ## directory containing the images
        self.transform = transform
        self.target_transform = target_transform

    def __len__(self):
        return len(self.img_labels) # number of samples

    def __getitem__(self, idx): # idx is the index of the sample to fetch
        img_path = os.path.join(self.img_dir, self.img_labels.iloc[idx, 0])
        image = read_image(img_path) ## read the image file into a tensor
        label = self.img_labels.iloc[idx, 1] ## look up the label
        if self.transform:
            image = self.transform(image)
        if self.target_transform:
            label = self.target_transform(label)
        return image, label # returns the tensor image and label in a tuple.
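The same three-method pattern works for any data source. A minimal sketch, assuming the samples already live in memory as tensors so no image files or CSV are needed (`InMemoryDataset` is a hypothetical name, not part of PyTorch):

```python
import torch
from torch.utils.data import Dataset


class InMemoryDataset(Dataset):
    """Hypothetical Dataset over pre-loaded tensors, mirroring CustomImageDataset."""

    def __init__(self, images, labels, transform=None, target_transform=None):
        self.images = images
        self.labels = labels
        self.transform = transform
        self.target_transform = target_transform

    def __len__(self):
        return len(self.labels)  # number of samples

    def __getitem__(self, idx):
        image, label = self.images[idx], int(self.labels[idx])
        if self.transform:
            image = self.transform(image)
        if self.target_transform:
            label = self.target_transform(label)
        return image, label  # (sample, label) tuple, like CustomImageDataset


images = torch.rand(10, 1, 28, 28)
labels = torch.arange(10)
ds = InMemoryDataset(images, labels, target_transform=lambda y: y * 2)

img, lbl = ds[3]
print(len(ds), img.shape, lbl)  # 10 torch.Size([1, 28, 28]) 6
```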

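2. Preparing data for training with DataLoaders

A Dataset holds the whole dataset and returns one sample at a time. During training we usually want to pass samples in minibatches, reshuffle the data at every epoch to reduce overfitting, and speed up data loading; DataLoader takes care of this.

import matplotlib.pyplot as plt
from torch.utils.data import DataLoader

train_dataloader = DataLoader(training_data, batch_size=64, shuffle=True)
test_dataloader = DataLoader(test_data, batch_size=64, shuffle=True) ## the data is now wrapped in DataLoaders, which can be iterated over to traverse the dataset

# Display image and label.
train_features, train_labels = next(iter(train_dataloader)) ## each iteration returns a batch of train_features and train_labels (64 samples each)
print(f"Feature batch shape: {train_features.size()}")
print(f"Labels batch shape: {train_labels.size()}")
img = train_features[0].squeeze() ## squeeze() drops the size-1 channel dimension, [1, 28, 28] -> [28, 28] (H x W), for plotting
label = train_labels[0]
plt.imshow(img, cmap="gray")
plt.show()
print(f"Label: {label}")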
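How a DataLoader splits a dataset into batches can be seen without downloading anything. A sketch using `TensorDataset` over hypothetical random data (150 fake 28x28 images); the last batch is simply smaller unless `drop_last=True` is set:

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy data: 150 fake 28x28 "images" with labels in 0..9.
features = torch.rand(150, 1, 28, 28)
labels = torch.randint(0, 10, (150,))
dataset = TensorDataset(features, labels)

loader = DataLoader(dataset, batch_size=64, shuffle=True)
batch_sizes = [X.shape[0] for X, y in loader]
print(len(loader), batch_sizes)  # 3 [64, 64, 22]

# drop_last=True discards the final incomplete batch.
loader_drop = DataLoader(dataset, batch_size=64, shuffle=True, drop_last=True)
print(len(loader_drop))  # 2
```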

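IV. Transforms

For training, we need the features as tensors normalized to [0, 1] and the labels as one-hot encoded tensors. To make these transformations we use ToTensor and Lambda.

import torch
from torchvision import datasets
from torchvision.transforms import ToTensor, Lambda

ds = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    # ToTensor converts a PIL image or NumPy ndarray into a FloatTensor and scales the image's pixel intensity values into the range [0., 1.]
    transform=ToTensor(),
    # Lambda wraps a user-defined lambda function. Here it creates a zero tensor of size 10 (the number of labels in the dataset) and calls scatter_, which writes value=1 at the index given by the label y
    target_transform=Lambda(lambda y: torch.zeros(10, dtype=torch.float).scatter_(0, torch.tensor(y), value=1))
)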
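The `scatter_`-based Lambda builds a one-hot vector by hand. A sketch checking it against `torch.nn.functional.one_hot`, a built-in alternative that the notes do not use, assuming an integer label `y = 3`:

```python
import torch
import torch.nn.functional as F

y = 3  # an integer class label, as FashionMNIST returns it

# The Lambda from above: zero vector of length 10, then write 1 at index y.
one_hot_scatter = torch.zeros(10, dtype=torch.float).scatter_(0, torch.tensor(y), value=1)

# Built-in equivalent; it returns int64, so cast to float to match.
one_hot_builtin = F.one_hot(torch.tensor(y), num_classes=10).float()

print(one_hot_scatter)  # tensor([0., 0., 0., 1., 0., 0., 0., 0., 0., 0.])
print(torch.equal(one_hot_scatter, one_hot_builtin))  # True
```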

V. Building the model

A neural network is itself a module made up of other modules (layers), and every module in PyTorch is a subclass of nn.Module.

1. Building the neural network

import os
import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

### 1. Check whether a GPU is available
device = "cuda" if torch.cuda.is_available() else "cpu"
print(f"Using {device} device")

### 2. Define the class
# Subclass nn.Module, initialize the layers in __init__, and implement the operations on the input data in forward
class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits

model = NeuralNetwork().to(device) # move the model to the GPU if one is available
print(model)

# To use the model, pass it the input data: calling model(X) executes forward() along with some background operations. Do not call model.forward() directly.
X = torch.rand(1, 28, 28, device=device)
logits = model(X)
pred_probab = nn.Softmax(dim=1)(logits) ## softmax turns the raw logits into a predicted probability per class
y_pred = pred_probab.argmax(1)
print(f"Predicted class: {y_pred}")

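2. Model layers

## A sample minibatch of 3 images of size 28x28 to pass through the layers
input_image = torch.rand(3,28,28)

## nn.Flatten: flattens each 2D 28x28 image into a 1D array of 784 values (the minibatch dimension, dim=0, is kept)
flatten = nn.Flatten()
flat_image = flatten(input_image)
print(flat_image.size())

## nn.Linear applies a linear transformation y = xA^T + b using its stored weights A and biases b
layer1 = nn.Linear(in_features=28*28, out_features=20)
hidden1 = layer1(flat_image)
print(hidden1.size())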

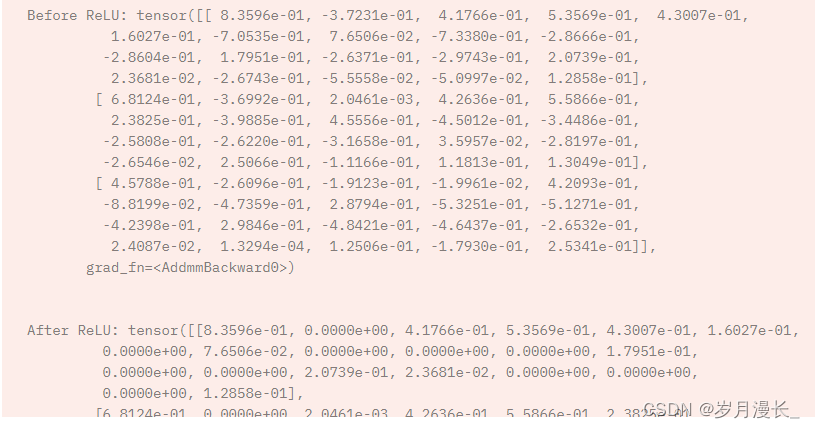
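The formula y = xA^T + b can be checked directly against the layer's stored parameters. A minimal sketch (the tolerance `atol=1e-5` is an assumption to absorb float rounding):

```python
import torch
from torch import nn

torch.manual_seed(0)
layer1 = nn.Linear(in_features=28 * 28, out_features=20)
flat_image = torch.rand(3, 28 * 28)  # a minibatch of 3 flattened images

# weight has shape (out_features, in_features); bias has shape (out_features,)
manual = flat_image @ layer1.weight.T + layer1.bias
print(layer1.weight.shape, layer1.bias.shape)  # torch.Size([20, 784]) torch.Size([20])
print(torch.allclose(layer1(flat_image), manual, atol=1e-5))  # True
```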

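## nn.ReLU: a non-linear activation that sets all negative values to 0
print(f"Before ReLU: {hidden1}\n\n")
hidden1 = nn.ReLU()(hidden1)
print(f"After ReLU: {hidden1}")

## nn.Sequential: an ordered container of modules; data passes through them in the order defined
seq_modules = nn.Sequential(
    flatten,
    layer1,
    nn.ReLU(),
    nn.Linear(20, 10)
)
input_image = torch.rand(3,28,28)
logits = seq_modules(input_image)

## nn.Softmax: scales the logits to values in [0, 1] that represent the model's predicted probability for each class
softmax = nn.Softmax(dim=1) # dim is the dimension along which the values must sum to 1
pred_probab = softmax(logits)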
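The role of `dim` is easiest to see on a tiny hand-written batch. A sketch with two samples (rows) and three classes (columns) of hypothetical scores:

```python
import torch
from torch import nn

# Two samples (rows), three classes (columns) of raw scores.
logits = torch.tensor([[2.0, 1.0, 0.1],
                       [0.5, 3.0, -1.0]])

probs = nn.Softmax(dim=1)(logits)       # normalize across classes: each row sums to 1
probs_dim0 = nn.Softmax(dim=0)(logits)  # normalize across samples: each column sums to 1

print(probs.sum(dim=1))       # both entries are 1 (up to float rounding)
print(probs_dim0.sum(dim=0))  # all three entries are 1 (up to float rounding)

# Softmax preserves ordering, so the predicted class is the same either way.
print(logits.argmax(1), probs.argmax(1))  # tensor([0, 1]) tensor([0, 1])
```

For classifier output of shape [batch, classes], `dim=1` is the one that yields per-sample probabilities.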

### Model parameters
# Get the weights and biases with parameters() or named_parameters()
print(f"Model structure: {model}\n\n")
for name, param in model.named_parameters():
    print(f"Layer: {name} | Size: {param.size()} | Values : {param[:2]} \n")

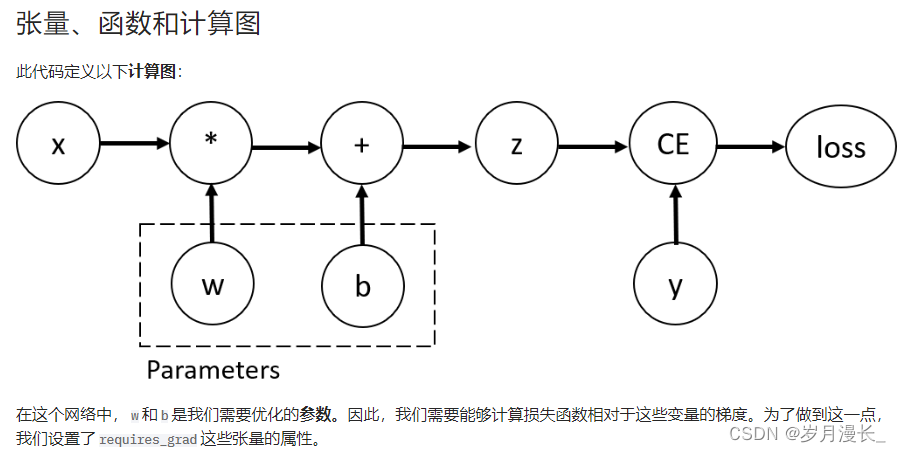
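`parameters()` also makes it easy to count the model's weights. For the 784 -> 512 -> 512 -> 10 network, each nn.Linear(in, out) holds in*out weights plus out biases: (784·512 + 512) + (512·512 + 512) + (512·10 + 10) = 401,920 + 262,656 + 5,130 = 669,706. A sketch that re-declares the same class so it runs on its own:

```python
import torch
from torch import nn


class NeuralNetwork(nn.Module):
    # Same architecture as in the notes: 784 -> 512 -> 512 -> 10
    def __init__(self):
        super().__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28 * 28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        return self.linear_relu_stack(self.flatten(x))


model = NeuralNetwork()

# Flatten and ReLU have no parameters; only the three Linear layers contribute.
names = [name for name, _ in model.named_parameters()]
total = sum(p.numel() for p in model.parameters())
trainable = sum(p.numel() for p in model.parameters() if p.requires_grad)

print(names[0], names[-1])  # linear_relu_stack.0.weight linear_relu_stack.4.bias
print(total, trainable)     # 669706 669706
```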

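VI. Automatic differentiation with TORCH.AUTOGRAD

When training neural networks, the most frequently used algorithm is back propagation: parameters are adjusted according to the gradient of the loss function with respect to each parameter, and torch.autograd computes those gradients automatically.

import torch

x = torch.ones(5) # input tensor
y = torch.zeros(3) # expected output
w = torch.randn(5, 3, requires_grad=True) ## requires_grad=True marks a parameter to be optimized, so autograd tracks operations on it
b = torch.randn(3, requires_grad=True)
z = torch.matmul(x, w)+b
loss = torch.nn.functional.binary_cross_entropy_with_logits(z, y)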

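To optimize the weights of the parameters in the neural network, we need the derivatives of the loss function with respect to those parameters. To compute the derivatives, we call loss.backward() and then read the values from w.grad and b.grad.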
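For this particular loss the gradients can also be derived by hand, which makes a good sanity check on autograd. With the default mean reduction over the 3 outputs, binary_cross_entropy_with_logits gives dL/dz = (sigmoid(z) - y) / 3; since z = xw + b, dL/dw is the outer product of x and dL/dz, and dL/db = dL/dz. A sketch comparing both:

```python
import torch

torch.manual_seed(0)
x = torch.ones(5)                          # input tensor
y = torch.zeros(3)                         # expected output
w = torch.randn(5, 3, requires_grad=True)
b = torch.randn(3, requires_grad=True)

z = torch.matmul(x, w) + b
loss = torch.nn.functional.binary_cross_entropy_with_logits(z, y)
loss.backward()

# Hand-derived gradients (mean reduction over the 3 outputs):
#   dL/dz = (sigmoid(z) - y) / 3,  dL/dw = outer(x, dL/dz),  dL/db = dL/dz
with torch.no_grad():
    dz = (torch.sigmoid(z) - y) / y.numel()
    grad_w = torch.outer(x, dz)
    grad_b = dz

print(torch.allclose(w.grad, grad_w), torch.allclose(b.grad, grad_b))  # True True
```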

## Compute the gradients
loss.backward()
print(w.grad)
print(b.grad)

## Disable gradient tracking, e.g. when we only want to apply a trained model to new data
z = torch.matmul(x, w)+b
print(z.requires_grad) # True
with torch.no_grad():
    z = torch.matmul(x, w)+b
print(z.requires_grad) # False
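PyTorch also offers `Tensor.detach()` for the same purpose on a single tensor: it returns a tensor with the same values that is no longer part of the computation graph. A short sketch:

```python
import torch

x = torch.ones(5)
w = torch.randn(5, 3, requires_grad=True)
b = torch.randn(3, requires_grad=True)

z = torch.matmul(x, w) + b
z_det = z.detach()  # same values, but cut off from the computation graph

print(z.requires_grad, z_det.requires_grad)  # True False
```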

VII. Optimizing model parameters

### 1. Prerequisite code
import torch
from torch import nn
from torch.utils.data import DataLoader
from torchvision import datasets
from torchvision.transforms import ToTensor, Lambda

training_data = datasets.FashionMNIST(
    root="data",
    train=True,
    download=True,
    transform=ToTensor()
)

test_data = datasets.FashionMNIST(
    root="data",
    train=False,
    download=True,
    transform=ToTensor()
)

train_dataloader = DataLoader(training_data, batch_size=64)
test_dataloader = DataLoader(test_data, batch_size=64)

class NeuralNetwork(nn.Module):
    def __init__(self):
        super(NeuralNetwork, self).__init__()
        self.flatten = nn.Flatten()
        self.linear_relu_stack = nn.Sequential(
            nn.Linear(28*28, 512),
            nn.ReLU(),
            nn.Linear(512, 512),
            nn.ReLU(),
            nn.Linear(512, 10),
        )

    def forward(self, x):
        x = self.flatten(x)
        logits = self.linear_relu_stack(x)
        return logits

model = NeuralNetwork()

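### 2. Hyperparameters, e.g. the number of epochs, the batch size, and the learning rate (lr)
learning_rate = 1e-3

### 3. Loss function
# Common loss functions include nn.MSELoss (mean squared error) for regression tasks and nn.NLLLoss (negative log likelihood) for classification. nn.CrossEntropyLoss combines nn.LogSoftmax and nn.NLLLoss.
# Initialize the loss function
loss_fn = nn.CrossEntropyLoss() # pass the model's raw output logits (not probabilities) to nn.CrossEntropyLoss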
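The statement that nn.CrossEntropyLoss combines nn.LogSoftmax and nn.NLLLoss can be checked numerically. A small sketch with random logits standing in for model output and hypothetical target labels:

```python
import torch
from torch import nn

torch.manual_seed(0)
logits = torch.randn(4, 10)           # raw model outputs for 4 samples, 10 classes
targets = torch.tensor([3, 0, 9, 1])  # class indices, not one-hot vectors

ce = nn.CrossEntropyLoss()(logits, targets)
nll = nn.NLLLoss()(nn.LogSoftmax(dim=1)(logits), targets)
print(torch.allclose(ce, nll))  # True
```

This is also why the model ends in a plain nn.Linear with no softmax: the loss applies the log-softmax itself.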

### 4. Optimizer

optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

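Inside the training loop, optimization happens in three steps:

- Call optimizer.zero_grad() to reset the gradients of the model parameters. Gradients add up by default; to prevent double-counting, we explicitly zero them at each iteration.
- Call loss.backward() to backpropagate the prediction loss. PyTorch stores the gradient of the loss with respect to each parameter.
- Once we have the gradients collected in the backward pass, call optimizer.step() to adjust the parameters.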
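Why zero_grad() matters is easy to see on a toy problem. A sketch assuming a hypothetical single parameter vector `w` with loss sum(w**2), whose gradient is 2*w (`compute_loss` is an illustrative helper, not a PyTorch API):

```python
import torch

# Hypothetical toy "model": one parameter vector, loss = sum(w**2), gradient = 2*w.
w = torch.tensor([1.0, 2.0], requires_grad=True)
optimizer = torch.optim.SGD([w], lr=0.1)


def compute_loss():
    return (w ** 2).sum()


# Two backward passes without zero_grad(): the gradients are summed.
compute_loss().backward()
compute_loss().backward()
accumulated = w.grad.clone()
print(accumulated)  # tensor([4., 8.])

# Reset, then a single backward pass gives the true gradient 2*w.
optimizer.zero_grad()
compute_loss().backward()
fresh = w.grad.clone()
print(fresh)  # tensor([2., 4.])

# SGD update: w <- w - lr * grad
optimizer.step()
print(w.detach())  # tensor([0.8000, 1.6000])
```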

Full training loop

# 1. Training loop
def train_loop(dataloader, model, loss_fn, optimizer):
    size = len(dataloader.dataset)
    model.train() # training mode; matters for layers such as dropout and batch normalization
    for batch, (X, y) in enumerate(dataloader):
        # Compute prediction and loss
        pred = model(X)
        loss = loss_fn(pred, y)

        # ---- these three steps are the same in essentially every training loop ----
        # Backpropagation
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        # ------------------------------------------------------------------------------

        if batch % 100 == 0:
            loss, current = loss.item(), batch * len(X)
            print(f"loss: {loss:>7f} [{current:>5d}/{size:>5d}]")

# 2. Test loop
def test_loop(dataloader, model, loss_fn):
    size = len(dataloader.dataset)
    num_batches = len(dataloader)
    model.eval() # evaluation mode; matters for layers such as dropout and batch normalization
    test_loss, correct = 0, 0
    with torch.no_grad():
        for X, y in dataloader:
            pred = model(X)
            test_loss += loss_fn(pred, y).item() # accumulate the per-batch loss
            correct += (pred.argmax(1) == y).type(torch.float).sum().item() # accumulate the number of correct predictions
    test_loss /= num_batches
    correct /= size
    print(f"Test Error: \n Accuracy: {(100*correct):>0.1f}%, Avg loss: {test_loss:>8f} \n")

# 3. Initialize the loss function and optimizer, and pass them to train_loop and test_loop. Increase the number of epochs to track the model's improving performance.
loss_fn = nn.CrossEntropyLoss()
optimizer = torch.optim.SGD(model.parameters(), lr=learning_rate)

epochs = 10
for t in range(epochs):
    print(f"Epoch {t+1}\n-------------------------------")
    train_loop(train_dataloader, model, loss_fn, optimizer)
    test_loop(test_dataloader, model, loss_fn)
print("Done!")

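VIII. Saving and loading models

Saving and loading model weights

PyTorch models store the learned parameters in an internal state dictionary called state_dict. These can be persisted with the torch.save method:

import torch
import torchvision.models as models

### Save the model
model = models.vgg16(weights='IMAGENET1K_V1') # torchvision versions before 0.13 used pretrained=True instead
torch.save(model.state_dict(), 'model_weights.pth')

### To load model weights, first create an instance of the same model, then load the parameters with load_state_dict().
model = models.vgg16() # no weights specified, i.e. the default pretrained weights are not loaded
model.load_state_dict(torch.load('model_weights.pth'))
model.eval()

### Before inference, be sure to call model.eval() to set the dropout and batch normalization layers to evaluation mode.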
