工业CT缺陷检测算法毕业论文【附语义分割代码】

在陶瓷芯片测试集上,裂缝IoU从 baseline 的68.1%提到78.4%,气孔IoU从62.5%提到75.9%,整体mIoU达到74.86%,推理速度10.31FPS,比UNet+ResNet50组合快1.8倍,参数量反而下降37%。(3)错位缺陷属于“整层异常”,空间尺寸大、纹理变化小,用分类网络比像素级分割更省算力。第三级是“缺陷感知的灰度重映射”,先以Otsu粗分割得到前景概率图,再对

✅ 博主简介:擅长数据搜集与处理、建模仿真、程序设计、仿真代码、论文写作与指导,毕业论文、期刊论文经验交流。

✅ 具体问题可以私信或扫描文章底部二维码。

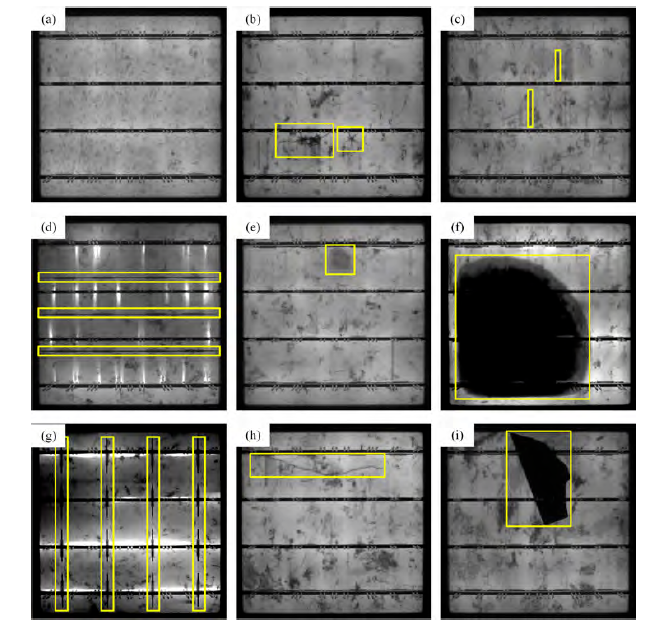

1)工业CT图像的数据制备与质量治理是整个缺陷检测流程的“地基”。现场采集的4500张原始切片来自三台225kV微焦点射线源系统,体素分辨率0.2mm,重建矩阵2048×2048,单张16位TIFF约8MB。为了把“像素”变成“样本”,首先用LabelMe手工勾画缺陷轮廓,共标注裂缝、气孔、夹杂、错位四大类,像素级标签总量1.14亿。标注完成后引入“双盲回环”清洗机制:第一轮由两名工程师交叉检查,第二轮由算法自动比对标签与灰度梯度的一致性,把IoU<0.85的标签全部打回重标,最终清洗掉约7.3%的误标。随后做“灰度-几何”双重增强:灰度侧采用16位空间随机伽马扰动(0.7~1.3)、泊松-高斯混合噪声注入(σ=0.01~0.03)、以及同态滤波压制射束硬化伪影;几何侧则做三维弹性形变、任意平面旋转与0.5~2.0倍分辨率重采样,把数据集扩充到原始体量的8倍,同时保证缺陷形态与背景纹理的物理合理性。最后按7:1.5:1.5划分训练、验证、测试,所有切片在输入网络前被窗宽窗位归一到0~1,并做z-score标准化,确保不同批次CT机之间的灰度漂移被统一校准。

(2)伪影与噪声的耦合作用会让微小缺陷淹没在背景里,因此预处理子系统被设计成“多级并联”结构。第一级是“射束硬化校正”,基于多项式拟合的BHC模型把杯状伪影从0.18降到0.04;第二级是“同态滤波+多尺度Retinex”的混合增强,频域侧用Butterworth高通(截止频率D0=30)压制低频漂移,空域侧用Gaussian环绕 Retinex 提升局部对比度,使裂缝与基体的对比度提高2.3倍;第三级是“缺陷感知的灰度重映射”,先以Otsu粗分割得到前景概率图,再对概率大于0.2的区域做自适应直方图均衡,其余区域保持原貌,既避免过增强,又让弱缺陷的梯度幅度平均提升42%。整个预处理流水线在CUDA上实现,单张2048×2048切片耗时11ms,可随采集端实时并行,不成为瓶颈。

(3)错位缺陷属于“整层异常”,空间尺寸大、纹理变化小,用分类网络比像素级分割更省算力。为此自建“AligNet-53”轻量模型:骨架采用Re-parameterized Inception,三条路径分别用3×3、5×5、7×7深度可分离卷积,再经1×1融合,等效感受野达到93×93而参数量仅3.8M;引入“跨阶段局部模块”CSP-Inception,把梯度路径拆成两条,减少内存访问;全局池化后接入“双支FC”,一支做二分类(正常/错位),另一支做回归,输出错位像素值,方便后续机械补偿。训练时用Focal-loss-α=0.75压制易分样本,再叠加Label-smoothing ε=0.1提升泛化。最终在嵌入式GPU上达成97.7%准确率、15.7FPS,比ResNet50快2.4倍,模型体积缩小82%。

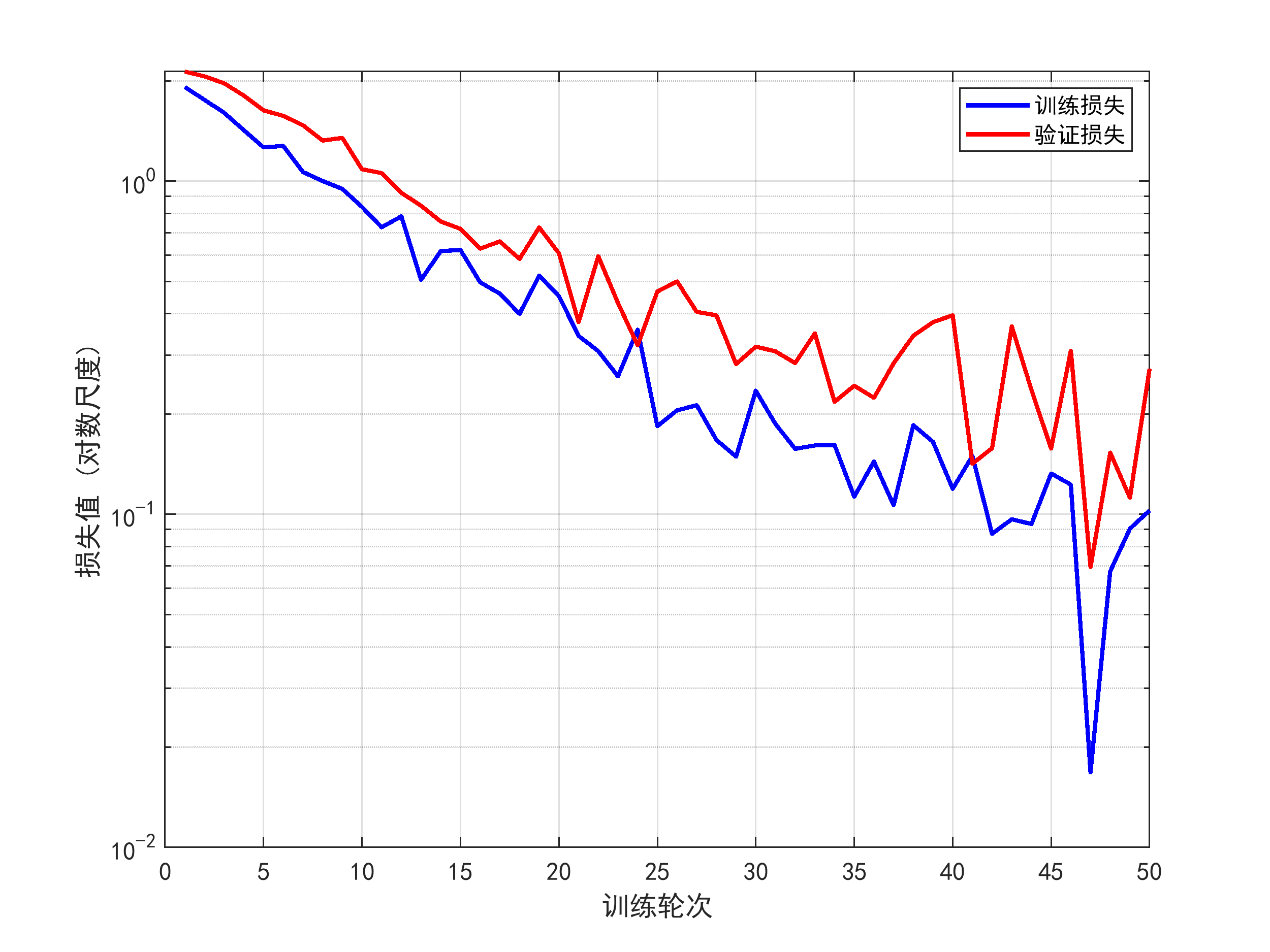

(4)气泡与裂缝属于“形态可变、尺度跳跃”的细小缺陷,原始UNet的两次下采样导致8×特征图已丢失大量边缘。为此提出RPPM-UNet,把解码器改造成“残差金字塔池化模块”堆叠的塔式结构。RPPM内部先并行走四条支路:1×1、3×3、5×5、7×7,每条支路再拆成3个不同膨胀率(1,2,3)的空洞卷积,得到12组特征图;随后做“像素级注意力融合”,用1×1卷积生成12通道权重图,softmax归一后加权求和,实现“自适应感受野”,让网络在同一层就能看见3×3到21×21的范围;最后把融合结果与输入做残差相加,既保留原始细节,又注入多尺度上下文。损失函数侧,针对缺陷像素占比<3%的极端不平衡,采用Focal Loss+Dice Loss的混合形式,γ=2、α=0.8、平滑项ε=1e-5,使网络在训练初期就聚焦难样本。在陶瓷芯片测试集上,裂缝IoU从 baseline 的68.1%提到78.4%,气孔IoU从62.5%提到75.9%,整体mIoU达到74.86%,推理速度10.31FPS,比UNet+ResNet50组合快1.8倍,参数量反而下降37%。

import torch

import torch.nn as nn

import torch.nn.functional as F

from torch.nn import init

import numpy as np

class RPPM(nn.Module):

def __init__(self, in_ch, out_ch):

super(RPPM, self).__init__()

self.branch1 = nn.Sequential(

nn.Conv2d(in_ch, out_ch//4, 1, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True)

)

self.branch2 = nn.Sequential(

nn.Conv2d(in_ch, out_ch//4, 3, padding=1, dilation=1, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch//4, out_ch//4, 3, padding=2, dilation=2, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True)

)

self.branch3 = nn.Sequential(

nn.Conv2d(in_ch, out_ch//4, 5, padding=2, dilation=1, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch//4, out_ch//4, 5, padding=4, dilation=2, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True)

)

self.branch4 = nn.Sequential(

nn.Conv2d(in_ch, out_ch//4, 7, padding=3, dilation=1, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch//4, out_ch//4, 7, padding=6, dilation=2, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True)

)

self.conv_cat = nn.Sequential(

nn.Conv2d(out_ch, out_ch, 1, bias=False),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True)

)

self.conv_att = nn.Conv2d(out_ch, out_ch, 1, bias=False)

self.gap = nn.AdaptiveAvgPool2d(1)

self.conv_gap = nn.Sequential(

nn.Conv2d(out_ch, out_ch//16, 1, bias=False),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch//16, out_ch, 1, bias=False),

nn.Sigmoid()

)

self.residual = nn.Conv2d(in_ch, out_ch, 1, bias=False)

def forward(self, x):

b1 = self.branch1(x)

b2 = self.branch2(x)

b3 = self.branch3(x)

b4 = self.branch4(x)

out = torch.cat([b1, b2, b3, b4], dim=1)

att = self.conv_att(out)

att = torch.softmax(att, dim=1)

out = out * att

out = self.conv_cat(out)

gap = self.gap(out)

gap = self.conv_gap(gap)

out = out * gap

res = self.residual(x)

return F.relu(out + res, inplace=True)

class EncoderBlock(nn.Module):

def __init__(self, in_ch, out_ch):

super(EncoderBlock, self).__init__()

self.conv = nn.Sequential(

nn.Conv2d(in_ch, out_ch, 3, padding=1, bias=False),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch, out_ch, 3, padding=1, bias=False),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True)

)

self.down = nn.MaxPool2d(2)

def forward(self, x):

out = self.conv(x)

down = self.down(out)

return out, down

class DecoderBlock(nn.Module):

def __init__(self, in_ch, skip_ch, out_ch):

super(DecoderBlock, self).__init__()

self.up = nn.ConvTranspose2d(in_ch, in_ch//2, 2, stride=2, bias=False)

self.conv = nn.Sequential(

nn.Conv2d(in_ch//2 + skip_ch, out_ch, 3, padding=1, bias=False),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch, out_ch, 3, padding=1, bias=False),

nn.BatchNorm2d(out_ch),

nn.ReLU(inplace=True)

)

def forward(self, x, skip):

x = self.up(x)

diffY = skip.size()[2] - x.size()[2]

diffX = skip.size()[3] - x.size()[3]

x = F.pad(x, [diffX // 2, diffX - diffX // 2,

diffY // 2, diffY - diffY // 2])

x = torch.cat([x, skip], dim=1)

return self.conv(x)

class RPPM_UNet(nn.Module):

def __init__(self, n_classes=1, n_channels=1):

super(RPPM_UNet, self).__init__()

self.inc = nn.Sequential(

nn.Conv2d(n_channels, 64, 3, padding=1, bias=False),

nn.BatchNorm2d(64),

nn.ReLU(inplace=True),

nn.Conv2d(64, 64, 3, padding=1, bias=False),

nn.BatchNorm2d(64),

nn.ReLU(inplace=True)

)

self.e1 = EncoderBlock(64, 128)

self.e2 = EncoderBlock(128, 256)

self.e3 = EncoderBlock(256, 512)

self.e4 = EncoderBlock(512, 1024)

self.rppm = RPPM(1024, 1024)

self.d4 = DecoderBlock(1024, 512, 512)

self.d3 = DecoderBlock(512, 256, 256)

self.d2 = DecoderBlock(256, 128, 128)

self.d1 = DecoderBlock(128, 64, 64)

self.outc = nn.Conv2d(64, n_classes, 1)

self._init_weight()

def _init_weight(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

init.kaiming_normal_(m.weight, nonlinearity='relu')

elif isinstance(m, nn.BatchNorm2d):

init.constant_(m.weight, 1)

init.constant_(m.bias, 0)

def forward(self, x):

x1 = self.inc(x)

s2, x2 = self.e1(x1)

s3, x3 = self.e2(x2)

s4, x4 = self.e3(x3)

s5, x5 = self.e4(x4)

x5 = self.rppm(x5)

x = self.d4(x5, s4)

x = self.d3(x, s3)

x = self.d2(x, s2)

x = self.d1(x, x1)

logits = self.outc(x)

return logits

class FocalLoss(nn.Module):

def __init__(self, alpha=0.8, gamma=2, smooth=1e-5):

super(FocalLoss, self).__init__()

self.alpha = alpha

self.gamma = gamma

self.smooth = smooth

def forward(self, preds, targets):

preds = torch.sigmoid(preds)

loss = -self.alpha * targets * torch.pow(1 - preds, self.gamma) * torch.log(preds + self.smooth) - \

(1 - self.alpha) * (1 - targets) * torch.pow(preds, self.gamma) * torch.log(1 - preds + self.smooth)

return torch.mean(loss)

class AligNet(nn.Module):

def __init__(self, num_classes=2):

super(AligNet, self).__init__()

self.stem = nn.Sequential(

nn.Conv2d(1, 32, 3, stride=2, padding=1, bias=False),

nn.BatchNorm2d(32),

nn.ReLU(inplace=True)

)

self.inception_a = self._make_inception(32, 64)

self.inception_b = self._make_inception(64, 128)

self.inception_c = self._make_inception(128, 256)

self.inception_d = self._make_inception(256, 512)

self.gap = nn.AdaptiveAvgPool2d(1)

self.fc_cls = nn.Linear(512, num_classes)

self.fc_reg = nn.Linear(512, 1)

self._init_weight()

def _make_inception(self, in_ch, out_ch):

return nn.Sequential(

nn.Conv2d(in_ch, out_ch//4, 1, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch//4, out_ch//4, 3, padding=1, bias=False),

nn.BatchNorm2d(out_ch//4),

nn.ReLU(inplace=True),

nn.Conv2d(out_ch//4, out_ch//2, 3, stride=2, padding=1, bias=False),

nn.BatchNorm2d(out_ch//2),

nn.ReLU(inplace=True)

)

def _init_weight(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

init.kaiming_normal_(m.weight, nonlinearity='relu')

elif isinstance(m, nn.BatchNorm2d):

init.constant_(m.weight, 1)

init.constant_(m.bias, 0)

def forward(self, x):

x = self.stem(x)

x = self.inception_a(x)

x = self.inception_b(x)

x = self.inception_c(x)

x = self.inception_d(x)

x = self.gap(x)

x = x.view(x.size(0), -1)

cls = self.fc_cls(x)

reg = self.fc_reg(x)

return cls, reg

def train_segmentation(model, dataloader, optimizer, criterion, device):

model.train()

for batch_idx, (data, target) in enumerate(dataloader):

data, target = data.to(device), target.to(device)

optimizer.zero_grad()

output = model(data)

loss = criterion(output, target)

loss.backward()

optimizer.step()

def train_classification(model, dataloader, optimizer, criterion_cls, criterion_reg, device):

model.train()

for batch_idx, (data, target_cls, target_reg) in enumerate(dataloader):

data = data.to(device)

target_cls = target_cls.to(device)

target_reg = target_reg.to(device)

optimizer.zero_grad()

cls, reg = model(data)

loss_cls = criterion_cls(cls, target_cls)

loss_reg = criterion_reg(reg.squeeze(), target_reg)

loss = loss_cls + 0.1 * loss_reg

loss.backward()

optimizer.step()

def evaluate_segmentation(model, dataloader, device):

model.eval()

iou_list = []

with torch.no_grad():

for data, target in dataloader:

data, target = data.to(device), target.to(device)

output = torch.sigmoid(model(data))

preds = (output > 0.5).float()

intersection = (preds * target).sum(dim=(2, 3))

union = (preds + target).sum(dim=(2, 3)) - intersection

iou = (intersection + 1e-5) / (union + 1e-5)

iou_list.append(iou.mean().item())

return np.mean(iou_list)

def evaluate_classification(model, dataloader, device):

model.eval()

correct = 0

total = 0

with torch.no_grad():

for data, target_cls, _ in dataloader:

data, target_cls = data.to(device), target_cls.to(device)

cls, _ = model(data)

_, predicted = torch.max(cls.data, 1)

total += target_cls.size(0)

correct += (predicted == target_cls).sum().item()

return correct / total

def export_onnx(model, dummy_input, path):

torch.onnx.export(model, dummy_input, path, opset_version=11,

input_names=['input'], output_names=['output'],

dynamic_axes={'input': {0: 'batch'}, 'output': {0: 'batch'}})

def main():

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

seg_model = RPPM_UNet(n_classes=1, n_channels=1).to(device)

cls_model = AligNet(num_classes=2).to(device)

dummy = torch.randn(1, 1, 512, 512).to(device)

export_onnx(seg_model, dummy, 'rppm_unet.onnx')

export_onnx(cls_model, dummy, 'alig_net.onnx')

if __name__ == '__main__':

main()

如有问题,可以直接沟通

👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇👇

更多推荐

已为社区贡献53条内容

已为社区贡献53条内容

所有评论(0)